In an age of touch-less interfaces and AI-driven systems, I am excited to share a project that merges computer vision, automation, and web technologies into a practical application: a Gesture-Controlled PowerPoint Presentation System. It is built with Python, OpenCV, MediaPipe, and PyAutoGUI, and it includes a web UI built with Flask and WebSocket.

This system lets presenters control their PowerPoint slides with simple hand gestures, with no physical remote or mouse required. In classrooms, meetings, or conferences, it frees the presenter to move around and stay engaged with the audience.

How It Works

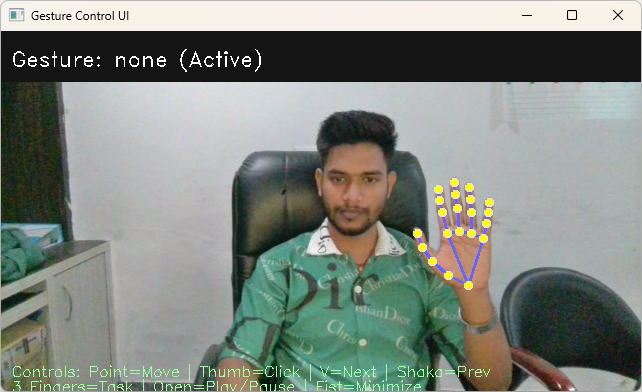

🔍 Gesture Recognition with MediaPipe and OpenCV

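The core of the system relies on MediaPipe, Google's framework for on-device machine learning, whose hand-tracking solution locates 21 landmarks per hand in real time. OpenCV captures the live webcam feed, and each frame is passed to MediaPipe so that the landmark positions can be checked for specific hand gestures such as: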

- ✋ Open palm – Start or Exit

- 👉 Swipe right – Next Slide

- 👈 Swipe left – Previous Slide
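The gesture checks listed above can be sketched in plain Python once the landmarks are available. This is only an illustration, not the project's actual code: it assumes each frame yields 21 normalized `(x, y)` pairs in MediaPipe's landmark order (wrist at index 0, fingertips at 8, 12, 16, 20), and the names `is_open_palm` and `SwipeDetector`, the window size, and the travel threshold are all hypothetical choices.

```python
from collections import deque

# MediaPipe hand landmark indices: fingertips and the PIP joint of each finger
# (index, middle, ring, pinky). The thumb is ignored in this simplified check.
FINGER_TIPS = (8, 12, 16, 20)
FINGER_PIPS = (6, 10, 14, 18)


def is_open_palm(landmarks):
    """Return True if all four fingers look extended.

    landmarks: 21 (x, y) pairs in normalized image coordinates, where y grows
    downward. Assumes the hand is held upright, so an extended fingertip sits
    above (smaller y than) its PIP joint.
    """
    return all(
        landmarks[tip][1] < landmarks[pip][1]
        for tip, pip in zip(FINGER_TIPS, FINGER_PIPS)
    )


class SwipeDetector:
    """Detect a horizontal swipe from the wrist's x position over recent frames."""

    def __init__(self, window=10, min_travel=0.25):
        self.xs = deque(maxlen=window)  # most recent wrist x positions
        self.min_travel = min_travel    # assumed threshold, fraction of frame width

    def update(self, wrist_x):
        """Feed one frame's wrist x; return 'swipe_right', 'swipe_left', or None."""
        self.xs.append(wrist_x)
        if len(self.xs) < self.xs.maxlen:
            return None  # not enough history yet
        travel = self.xs[-1] - self.xs[0]
        if abs(travel) < self.min_travel:
            return None
        self.xs.clear()  # start fresh so one movement fires only once
        return "swipe_right" if travel > 0 else "swipe_left"
```

Whether increasing x corresponds to the presenter's right depends on whether the webcam frame is mirrored, so the direction labels may need to be swapped in practice.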

🖱️ Slide Control with PyAutoGUI

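Once a gesture is recognized, PyAutoGUI simulates key presses such as Right Arrow or Left Arrow, mimicking the native controls of PowerPoint or any other presentation software. Because the key press goes to whichever window has keyboard focus, the slideshow must be the active window.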
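A minimal sketch of this mapping is shown below. The gesture names, the `SlideController` class, and the one-second cooldown are assumptions made for illustration; only `pyautogui.press` and the `"right"`/`"left"` key names come from PyAutoGUI itself. The press function is injectable so the logic can be exercised without a display.

```python
import time

# Hypothetical gesture names mapped to PyAutoGUI key names.
GESTURE_TO_KEY = {"swipe_right": "right", "swipe_left": "left"}


class SlideController:
    """Turn recognized gestures into key presses, with a cooldown between them."""

    def __init__(self, press=None, cooldown=1.0, clock=time.monotonic):
        if press is None:
            # Imported lazily so the class can be tested on a machine without a GUI.
            import pyautogui
            press = pyautogui.press
        self._press = press
        self._clock = clock
        self.cooldown = cooldown          # assumed minimum seconds between presses
        self._last_press = float("-inf")  # time of the previous press

    def handle(self, gesture):
        """Press the mapped key; return True if a key press was sent."""
        key = GESTURE_TO_KEY.get(gesture)
        if key is None:
            return False  # gesture has no slide action
        now = self._clock()
        if now - self._last_press < self.cooldown:
            return False  # ignore repeats while the hand is still moving
        self._press(key)
        self._last_press = now
        return True
```

In the real loop, `SlideController().handle("swipe_right")` would send a Right Arrow press to the focused window. The cooldown is one simple way to keep a single hand movement from skipping several slides.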

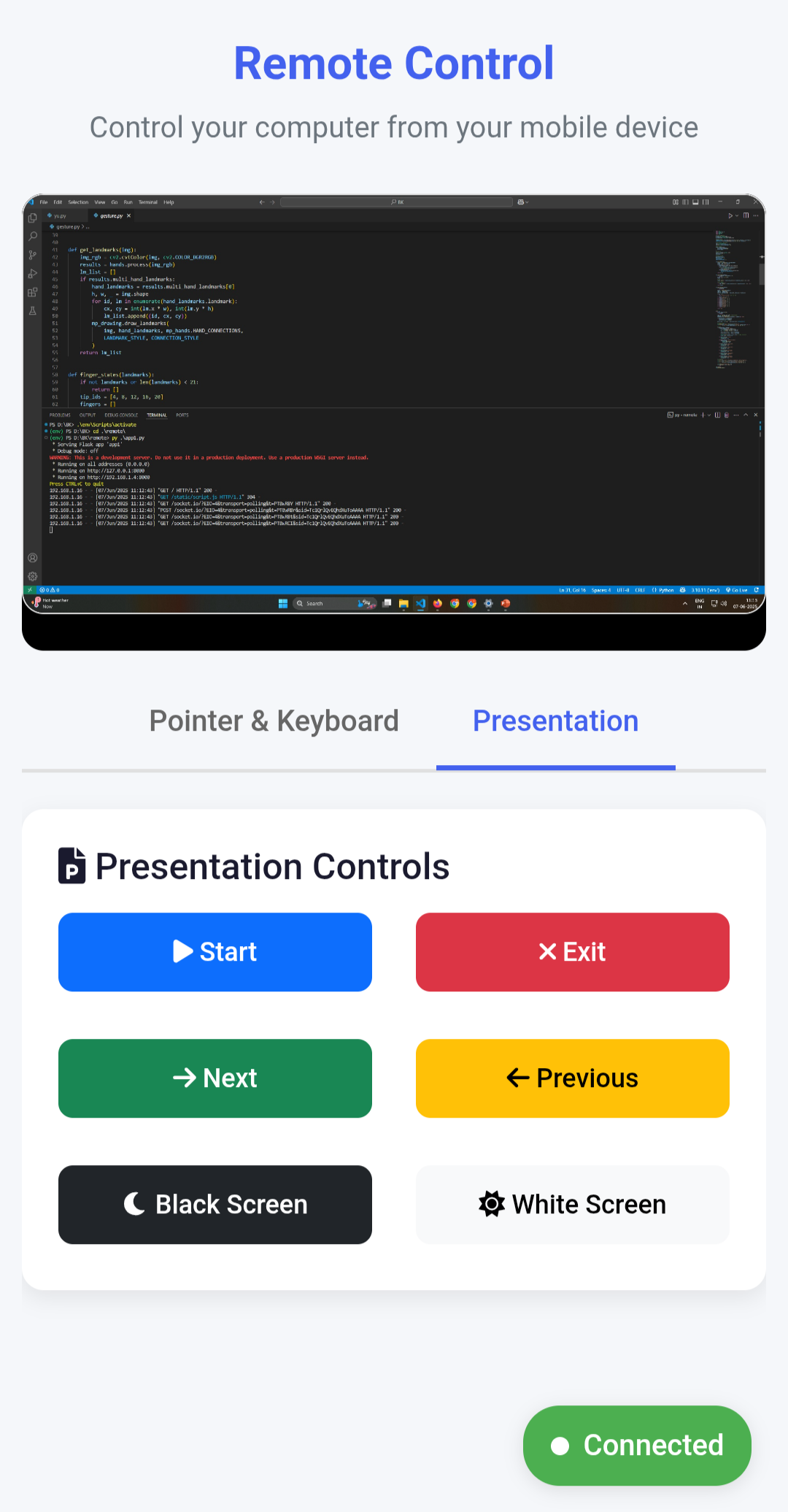

🌐 Web GUI with Flask & WebSocket

To make the experience more flexible, I developed a web-based GUI using Flask, with a WebSocket connection for real-time updates. Users can view the webcam feed, monitor gesture detection status, and remotely toggle presentation control, all from a browser on a phone or another computer. This layer is especially useful in environments where moving back to the PC isn’t feasible.
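The post does not describe how the web layer and the gesture loop share state, so the following is only one possible shape for it. It assumes a small thread-safe object that a Flask route or WebSocket handler could call to toggle control, and that serializes its status as a JSON message to push to connected browsers. The class name `PresentationState` and the message fields are hypothetical.

```python
import json
import threading


class PresentationState:
    """Shared state between the gesture loop and the web UI (illustrative only)."""

    def __init__(self):
        self._lock = threading.Lock()  # the web server and camera loop run in different threads
        self._control_enabled = False
        self._last_gesture = None

    def toggle(self):
        """Flip gesture control on or off; return the new value."""
        with self._lock:
            self._control_enabled = not self._control_enabled
            return self._control_enabled

    def record_gesture(self, gesture):
        """Remember the most recent gesture so the UI can display it."""
        with self._lock:
            self._last_gesture = gesture

    def to_message(self):
        """Serialize the current status as a JSON string for a WebSocket push."""
        with self._lock:
            return json.dumps({
                "type": "status",
                "control_enabled": self._control_enabled,
                "last_gesture": self._last_gesture,
            })
```

A toggle button in the browser would then map to `state.toggle()` on the server, followed by broadcasting `state.to_message()` so every open page shows the same status.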

Key Features

✅ Touch-less Control – No remotes or physical buttons required

✅ Real-Time Hand Tracking – Powered by MediaPipe for high accuracy

✅ Flask-Based Web Interface – Control and monitor from any device

✅ Portable – Works on Windows today and can be extended to Linux/macOS

✅ Lightweight & Open Source – Pythonic, simple, and extendable

Applications

- Education – Teachers can move freely while delivering lectures

- Conferences – Seamless navigation of slides without breaking eye contact

- Assistive Tech – Touch-less control for people who find remotes or keyboards difficult to use

Conclusion

This project exemplifies the fusion of AI-driven interfaces and user-centric design. The goal is not just innovation, but accessible and practical innovation. I’m proud to have built something useful not only for classrooms but for any presentation environment where technology should enhance — not interrupt — the experience.

References

https://ai.google.dev/edge/mediapipe/solutions/vision/hand_landmarker

https://flask.palletsprojects.com/en/stable

https://developer.mozilla.org/en-US/docs/Web/API/WebSockets_API

– Badal Kumar

IT Head & Assistant Professor – Computer Science, Madhav University