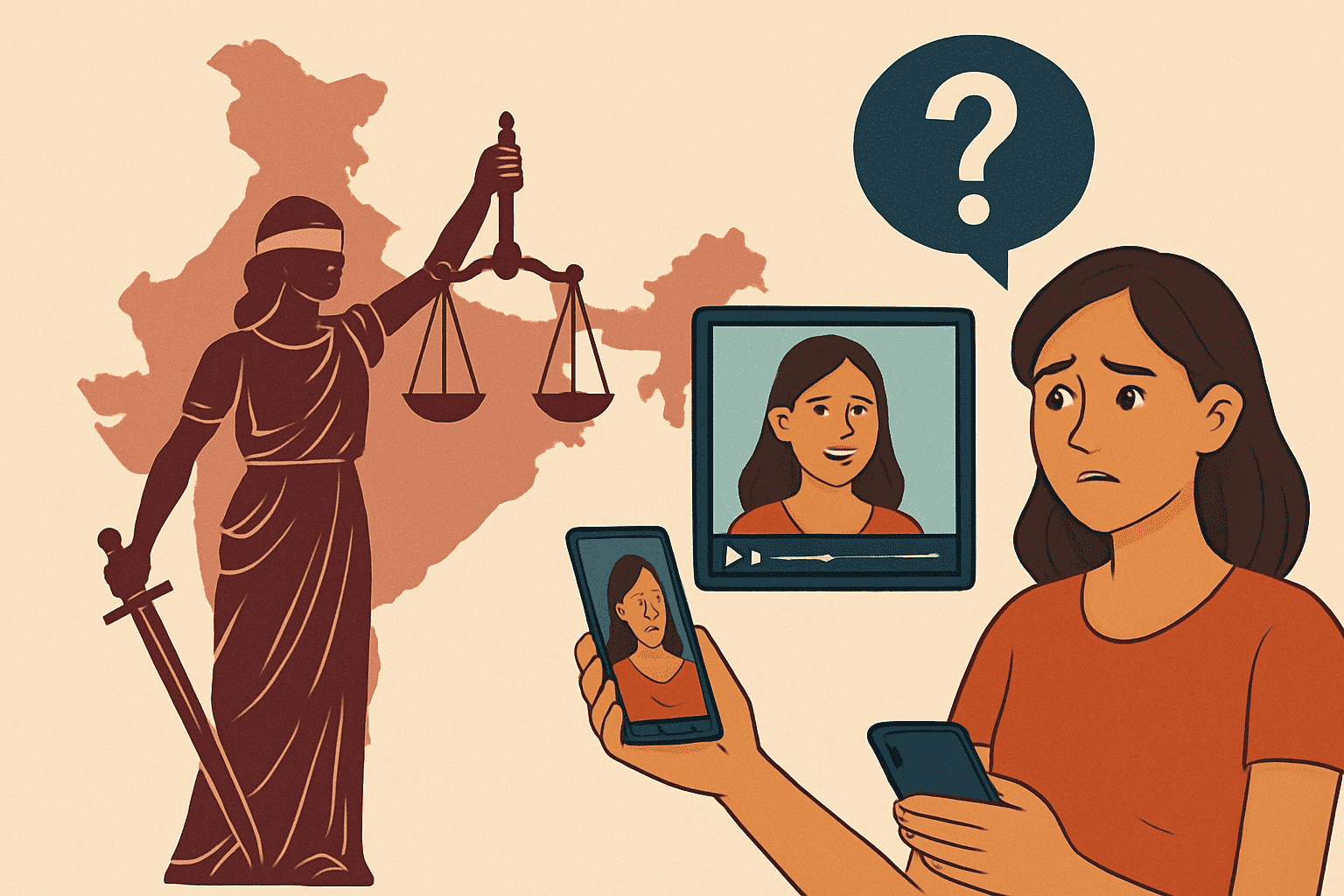

Artificial Intelligence (AI) And Machine Learning-Driven Deep Fake Technology Has Presented Previously Unheard-Of Difficulties For The Legal Frameworks Controlling Digital Identity, Consent, And Privacy. People In India Are At Risk Of Non-Consensual Synthetic Media, Identity Theft, And Reputational Damage Due To The Substantial Legal Void Caused By The Lack Of Explicit Legislation Addressing Deep Fakes. This Essay Critically Analyzes How Inadequate India’s Current Cyber Laws—Such As The Information Technology Act Of 2000 And Its Implementing Provisions—Are To Address The Complex Dangers Posed By Deep Fakes. In The Context Of Deep Fake Usage, It Examines The Moral And Legal Implications Of Consent, Especially When It Comes To Instances Of Fraud, Misinformation, And Non-Consensual Pornography. The Essay Suggests A Complete Framework For India That Strikes A Balance Between Innovation, Freedom Of Speech, And Individual Rights By Drawing On Foreign Regulatory Models, Such As China’s Deep Synthesis Provisions And The European Union’s Artificial Intelligence Act. This Essay Promotes A Strong Policy Response To Protect Consent And Privacy In The Digital Age By Combining Technology Solutions, Legislative Changes, And International Collaboration.

Introduction

Deep Fake Technology, A Byproduct Of Sophisticated AI Algorithms Such As Generative Adversarial Networks (GANs), Has Transformed The Digital Environment By Enabling Hyper-Realistic Synthetic Media. Although Its Uses In Education And Entertainment Are Promising, Its Abuse For Fraud, Misinformation, And Non-Consensual Material Raises Serious Moral And Legal Issues. In India, A Country With A Growing Number Of Digital Citizens And Rising Rates Of Cybercrime, The Lack Of Specific Laws To Combat Deep Fakes Makes These Vulnerabilities Worse. The Idea Of Consent, Which Is Fundamental To Privacy And Personal Autonomy, Is Especially Compromised When People’s Likenesses Are Used Without Their Agreement. This Article Examines The Legal Gaps In India’s Cyber Law System, Assesses The Shortcomings Of Current Laws, And Suggests A Progressive Regulatory Structure Based On International Best Practices.

The Deep Fake Phenomenon: Technology And Threats

Deep Fakes Use Deep Learning And GANs To Create Artificial Audio, Video, Or Images That Are Almost Indistinguishable From Real Content. The Technology’s Dual-Use Nature, Which Allows Both Malicious Exploitation And Creative Applications, Has Raised Global Concern. High-Profile Incidents In India, Like The 2023 Deep Fake Video Of Actor Rashmika Mandanna, Show How Dangerous The Technology Can Be, Especially When It Comes To Invasions Of Privacy And Violations Of Consent. Numerous Crimes Are Linked To Deep Fakes, Including:

• Non-Consensual Pornography: Women Make Up Over 90% Of Deep Fake Victims, And They Are Frequently The Targets Of Harassment Or Revenge Porn.

• Fraud And Identity Theft: Financial Dangers Are Highlighted By Cases Such As The 2019 Incident In Which The CEO Of A UK-Based Firm Was Tricked Into Transferring €220,000 By A Deep Faked Voice.

• Propaganda And Disinformation: Deep Fakes Have The Ability To Sway Public Perception, As Demonstrated By The 2022 Fake Video Of Ukrainian President Volodymyr Zelenskyy.

Since People Lose Control Over Their Digital Identities And Sometimes Have No Redress Under India’s Present Legal System, These Risks Call Into Question The Integrity Of Consent.

India’s Cyber Law Landscape: A Fragmented Response

The Information Technology Act, 2000 (IT Act), India’s Main Cyber Law, Was Enacted Prior To The Development Of AI-Driven Technologies Like Deep Fakes. Although Certain Provisions Deal With Related Offences, They Are Insufficient To Handle The Complexity Of Deep Fake Misuse:

• Section 66E: Penalizes Violations Of Privacy Through The Capture Or Publication Of Private Images Without Consent, With Up To Three Years In Jail Or A Fine Of Up To ₹2 Lakh. It Does Not, However, Specifically Address Synthetic Media.

• Sections 67, 67A, And 67B: Forbid The Dissemination Of Obscene Or Sexually Explicit Content, But They Are Reactive Provisions That Do Not Address The Production Of Deep Fakes.

• Section 66D: Targets Cheating By Personation Using Computer Resources, But Does Not Specifically Address Fraud Enabled By Artificial Intelligence.

Sections 499 (Defamation), 465 (Forgery), And 509 (Insulting The Modesty Of A Woman) Of The Indian Penal Code, 1860, Provide Further Remedies, Although They Are Not Designed To Address Issues Unique To Deep Fakes. The Unauthorized Use Of Copyrighted Content In Deep Fakes May Be Covered By The Copyright Act Of 1957, But That Act Does Not Address Invasions Of Privacy Or The Absence Of Consent.

The Delhi High Court’s Decision In Anil Kapoor V. Simply Life India (2023) Is One Example Of A Judicial Intervention That Has Acknowledged Privacy Infringement And Personality Rights In Deep Fake Situations. Such Rulings Are Case-Specific, However, And Do Not Create A Systematic Framework. The Personal Data Protection Bill, 2019, Attempted To Safeguard Personal Information, But It Was Withdrawn In 2022 And Did Not Specifically Address Synthetic Media.

Consent in the Digital Age: Ethical and Legal Dimensions

Deep Fakes Violate The Fundamental Premise Of Consent In Data Protection And Privacy Law By Using Likenesses Without Permission. There Are Significant Ethical Ramifications, Especially In Gendered Situations Where Women Are Disproportionately Harmed. Legally Speaking, Enforcement Is Made More Difficult By The Lack Of Precise Standards For Permission In The Context Of Synthetic Media. For Example, India’s Laws Do Not Specifically Classify Non-Consensual Sexual Deep Fakes—Which Include Face Swaps Or Synthetic Content—As A Type Of Technology-Facilitated Gender-Based Violence.

Deep Fakes Pose A Direct Danger To The Right To Privacy, Which Was Established As A Fundamental Right In Justice K.S. Puttaswamy V. Union Of India (2017). However, In The Absence Of Specific Legislation, Victims Are Forced To Rely On Piecemeal Remedies That Are Frequently Insufficient To Redress Psychological, Social, And Economic Harm. The Problem Is Made Worse By The Absence Of Preventative Measures Like Mandatory Labeling Or Detection Systems.

International Perspectives: Lessons for India

International Reactions To Deep Fakes Provide Insightful Information For India’s Regulatory Strategy:

• European Union: The EU’s Artificial Intelligence Act (2024), Which Uses A Risk-Based Methodology, Subjects Deep Fakes To Disclosure And Transparency Obligations Rather Than Classifying Them As High-Risk AI Systems. Article 50 Strikes A Balance Between Security And Freedom Of Speech By Relaxing The Disclosure Duty For Evidently Creative Or Satirical Works And Exempting Uses Authorized By Law For Law Enforcement. The General Data Protection Regulation (GDPR) Additionally Protects Personal Data, Including A Person’s Likeness, From Unauthorized Use In Deep Fakes.

• China: The Deep Synthesis Provisions (2023) Require Consent And Labeling For Deepfake Material, And Noncompliance Carries Severe Penalties. Public Trust And Traceability Are Given Top Priority In This Legislation.

• United States: Non-Consensual Deep Fake Pornography Is Illegal In States Like Virginia, While The Proposed Deep Fakes Accountability Act (Introduced In 2019, But Not Enacted) Would Mandate Watermarking And Labeling.

These Models Stress Proactive Measures, Such As Detection Technology, Content Labeling, And International Engagement, Which India May Adapt To Its Own Context.

The Legal Vacuum: Challenges And Implications

India’s Lack Of Deep Fake-Specific Laws Leads To A Number Of Issues:

1. Reactive Enforcement: Since There Are Currently No Safeguards Against Creation Or Distribution, Rules Primarily Penalize Abuse After It Has Already Occurred.

2. Platform Accountability: Although The IT Rules, 2021, Mandate That Intermediaries Remove Harmful Content Within 36 Hours, Platforms Lack The Resources Or Motivation To Proactively Identify Deep Fakes.

3. Global Character Of Deep Fakes: As Demonstrated By Instances Involving Multinational Platforms, Cross-Border Distribution Makes Enforcement More Difficult.

4. Public Trust: Deep Fakes Undermine Public Trust In Digital Media, Which Might Interfere With Elections And Societal Harmony.

These Deficiencies Show How Urgently A Thorough Legal Framework That Takes Into Account The Sociological And Technological Aspects Of Deep Fakes Is Needed.

Proposed Framework for India

To Remedy The Legal Vacuum, India Must Take A Multidimensional Approach That Includes Legal, Technical, And Sociological Measures:

1. Legislative Reforms

- Enact Deep Fake-Specific Legislation: Drawing On The EU AI Act, This Legislation Ought To:

  - Classify Deep Fakes According To Their Level Of Risk (Malicious Versus Artistic, For Example).

  - Mandate Consent For Using Likenesses In Synthetic Media.

  - Impose Severe Sanctions, Such As Fines And Imprisonment, For Non-Consensual Deep Fakes.

- Amend The IT Act: Include Provisions Defining Consent And Liability That Are Specific To Artificial Intelligence And Synthetic Media.

- Strengthen Privacy Laws: Ensure That India’s Data Protection Legislation Includes Clear Safeguards Against Data Misuse Linked To Deep Fakes.

2. Technological Interventions

- Detection And Authentication: Invest In AI-Powered Detection Technologies, Such As Those That Use Blockchain Technology To Verify Content.

- Content Labeling: As Is Done In China, Mandate That Platforms Label Deep Fake Content.

- Watermarking: To Provide Traceability, Require Watermarking For Media Produced By AI.

3. Platform Responsibility

- Proactive Monitoring: Amend The IT Rules To Require AI-Based Filtering Of Deep Fake Content.

- Liability For Non-Compliance: Remove Platforms’ Safe-Harbour Immunity Under Section 79 Of The IT Act If They Do Not Take Swift Action To Stop Deep Fake Material.

4. Public Awareness And Education

- Campaigns For Digital Literacy: Teach People How To Spot Deep Fakes And Understand Consent In Online Settings.

- Law Enforcement Training: Give Law Enforcement And The Courts The Tools They Need To Deal With Cases Involving Deep Fakes.

5. International Cooperation

- Global Standards: Work Together With China, The US, And The EU To Create Uniform Guidelines For Regulating Deep Fakes.

- Cross-Border Enforcement: Establish Treaties To Combat Transnational Deep Fake Crimes.
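
The Labeling And Traceability Measures Proposed Under Technological Interventions Can Be Illustrated With A Small Sketch. It Is Only An Assumption Of How A Platform Might Bind An "AI-Generated" Disclosure To A Specific File: The Function Names, The Record Fields, And The Signing Key Are All Hypothetical And Are Not Drawn From Any Statute Or Existing Standard. The Sketch Uses A Signed Sidecar Label, Not A Watermark Embedded In The Media Itself, So It Shows Traceability Rather Than Tamper-Proof Marking.

```python
import hashlib
import hmac
import json

# Hypothetical signing key held by the generating tool or platform (assumption).
SIGNING_KEY = b"demo-key-not-for-production"


def make_label(media: bytes, generator: str) -> dict:
    """Build a disclosure label that binds an 'AI-generated' flag to the media."""
    record = {
        "sha256": hashlib.sha256(media).hexdigest(),  # fingerprint of the exact file
        "synthetic": True,                            # the disclosure itself
        "generator": generator,                       # who or what produced it
    }
    payload = json.dumps(record, sort_keys=True).encode()
    record["signature"] = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return record


def verify_label(media: bytes, label: dict) -> bool:
    """Return True only if the label is authentic and matches these exact bytes."""
    record = {k: v for k, v in label.items() if k != "signature"}
    payload = json.dumps(record, sort_keys=True).encode()
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    signature_ok = hmac.compare_digest(expected, label.get("signature", ""))
    hash_ok = record.get("sha256") == hashlib.sha256(media).hexdigest()
    return signature_ok and hash_ok
```

A Label Created For One File Fails Verification If The File Is Altered Or If The "Synthetic" Flag Is Edited, Which Is The Property A Labeling Mandate Would Rely On. A Sidecar Label Can Still Be Stripped From The File Entirely, Which Is Why The Proposals Above Pair Labeling With Watermarking And Detection.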

Case Studies: Real-World Implications

- Rashmika Mandanna Case (2023): The Public Was Outraged When The Actor’s Face Was Superimposed Onto Another Woman’s Body In A Deep Fake Video. Sections 465 And 469 Of The IPC Were Invoked By The Delhi Police, Although Prosecution Was Constrained By The Absence Of Specific Legislation.

- Anil Kapoor V. Simply Life India (2023): The Delhi High Court Established A Precedent For The Legal Recognition Of Deep Fake Harms By Upholding The Actor’s Personality Rights.

- Rana Ayyub Case: The Journalist Was Targeted With A Pornographic Deep Fake Video As Part Of A Harassment Campaign, Exposing Weaknesses In The Protection Of Victims Of Targeted Harassment.

These Incidents Highlight The Necessity Of Proactive Laws To Guard Against Damage And Guarantee Justice.

Balancing Innovation and Regulation

Deep Fakes Are Not Always Harmful; Their Application In Satire, Education, And Cinema Shows Their Creative Potential. A Regulatory Framework Must Safeguard Rights Without Impeding Innovation. The EU’s Carve-Out For Creative Deep Fakes Is One Example Of Striking A Balance Between Accountability And Freedom Of Expression. India Can Maximize The Advantages Of Deep Fake Technology While Reducing Its Hazards By Promoting Ethical AI Research Through Public-Private Collaborations.

Conclusion

In The Digital Era, The Emergence Of Deep Fakes Signifies A Paradigm Shift In The Way Identity, Privacy, And Consent Are Negotiated. With Its Dependence On Outdated Legislation And Reactive Measures, India’s Present Cyber Law System Is Ill-Prepared To Handle This Issue. India Can Fill The Legal Void And Protect Individual Rights By Implementing A Holistic Strategy That Incorporates Platform Accountability, Technological Innovation, Legislative Changes, And International Collaboration. In Addition To Guarding Against Deep Fake Abuse, The Suggested Approach Would Help Establish India As A Global Leader In Ethical AI Governance. As Deep Fake Technology Evolves, The Regulations Governing It Must Evolve As Well To Keep Consent A Fundamental Component Of Digital Autonomy.

– Manvendra Rajpurohit

Assistant Professor, Law Faculty, Madhav University